Google's Nested Learning

🔥 𝗚𝗼𝗼𝗴𝗹𝗲’𝘀 𝗡𝗲𝘀𝘁𝗲𝗱 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴: 𝗛𝗼𝘄 𝗦𝗲𝗹𝗳-𝗠𝗼𝗱𝗶𝗳𝘆𝗶𝗻𝗴 𝗧𝗶𝘁𝗮𝗻𝘀 𝗠𝗲𝗿𝗴𝗲 𝗢𝗽𝘁𝗶𝗺𝗶𝘇𝗮𝘁𝗶𝗼𝗻 𝗮𝗻𝗱 𝗠𝗲𝗺𝗼𝗿𝘆

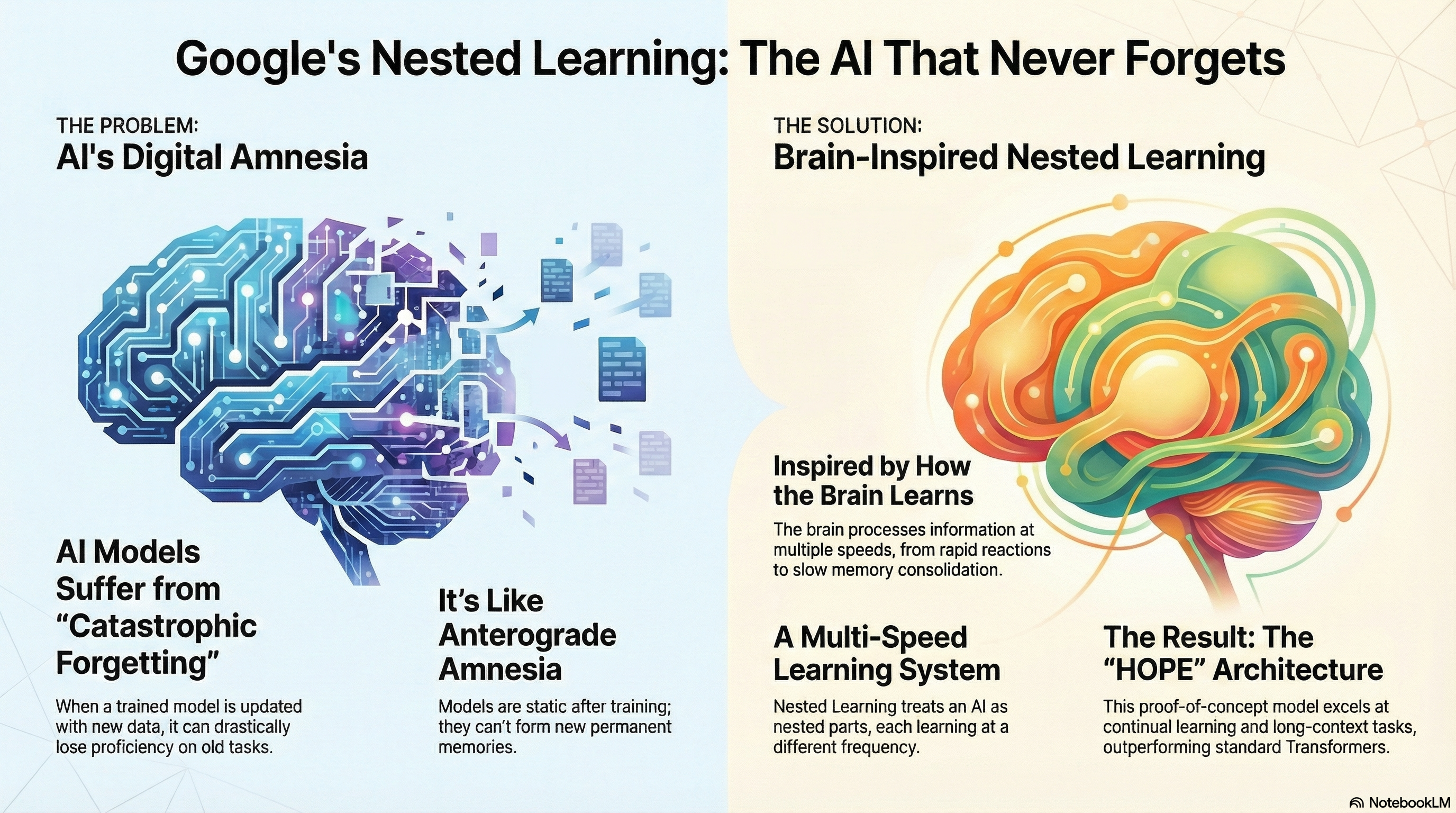

AI has a memory problem. Your brain can learn something new today without wiping yesterday. AI? Train it on something new and it tends to overwrite the old. 𝗖𝗮𝘁𝗮𝘀𝘁𝗿𝗼𝗽𝗵𝗶𝗰 𝗳𝗼𝗿𝗴𝗲𝘁𝘁𝗶𝗻𝗴 is its default setting.

For years our fix was “make it bigger.” More layers. More parameters. More GPUs.

Google’s latest research says: 𝗪𝗲’𝘃𝗲 𝗯𝗲𝗲𝗻 𝘀𝗰𝗮𝗹𝗶𝗻𝗴 𝘁𝗵𝗲 𝘄𝗿𝗼𝗻𝗴 𝗱𝗶𝗺𝗲𝗻𝘀𝗶𝗼𝗻.

🧠 𝗧𝗵𝗲 𝗕𝗿𝗮𝗶𝗻 𝗟𝗲𝗮𝗿𝗻𝘀 𝗟𝗶𝗸𝗲 𝗮𝗻 𝗢𝗿𝗰𝗵𝗲𝘀𝘁𝗿𝗮 — Not a Metronome

Your brain runs multiple learning tempos at once:

- 𝗚𝗮𝗺𝗺𝗮: fast, reactive

- 𝗕𝗲𝘁𝗮: active thinking

- 𝗧𝗵𝗲𝘁𝗮/𝗗𝗲𝗹𝘁𝗮: slow, deep storage

AI today forces every “instrument” to learn at the same speed… then shuts learning off entirely after training.

This is the 𝗶𝗹𝗹𝘂𝘀𝗶𝗼𝗻 𝗼𝗳 𝗱𝗲𝗽𝘁𝗵: stack as many layers as you like, the model still learns at a single tempo.

🎼 𝗡𝗲𝘀𝘁𝗲𝗱 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴: 𝗔𝗜 𝗪𝗶𝘁𝗵 𝗠𝘂𝗹𝘁𝗶𝗽𝗹𝗲 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴 𝗧𝗲𝗺𝗽𝗼𝘀

Google’s Nested Learning reframes a model as layers of learners, each updating at its own frequency:

- 𝗙𝗮𝘀𝘁 → immediate context

- 𝗠𝗲𝗱𝗶𝘂𝗺 → structural patterns

- 𝗦𝗹𝗼𝘄 → stable long-term memory

A multi-tempo learning system — just like your brain.
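To make "each updating at its own frequency" concrete, here is a toy sketch. It is not Google's implementation: the `Level` class, the update periods (1, 4, 16), the learning rate, and the quadratic loss are all assumptions chosen for illustration. Every level sees every gradient, but each one only commits an update on its own schedule.

```python
from dataclasses import dataclass


@dataclass
class Level:
    """One learner in the nest: a scalar weight with its own update period."""
    name: str
    period: int           # commit an update every `period` steps (illustrative value)
    lr: float
    weight: float = 0.0
    grad_sum: float = 0.0
    updates: int = 0

    def observe(self, step: int, grad: float) -> None:
        # Accumulate every gradient, but only apply it on this level's schedule.
        self.grad_sum += grad
        if step % self.period == 0:
            self.weight -= self.lr * self.grad_sum / self.period
            self.grad_sum = 0.0
            self.updates += 1


def run(num_steps: int = 16) -> list[Level]:
    levels = [
        Level("fast", period=1, lr=0.5),
        Level("medium", period=4, lr=0.5),
        Level("slow", period=16, lr=0.5),
    ]
    target = 1.0
    for step in range(1, num_steps + 1):
        for level in levels:
            grad = level.weight - target  # gradient of 0.5 * (w - target)^2
            level.observe(step, grad)
    return levels


if __name__ == "__main__":
    for level in run():
        print(f"{level.name:6s} updates={level.updates:2d} weight={level.weight:.3f}")
```

After 16 steps the fast level has updated 16 times and nearly reached the target, the medium level has updated 4 times, and the slow level has moved exactly once. Same data, three tempos.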

💥 𝗧𝗵𝗲 𝗕𝗿𝗲𝗮𝗸𝘁𝗵𝗿𝗼𝘂𝗴𝗵 𝗜𝗻𝘀𝗶𝗴𝗵𝘁: Optimizers = Memory Systems

Google recasts each piece of training as a kind of memory:

- 🔹 Backprop is memory of surprise

- 🔹 Momentum is memory of gradient history

- 🔹 Adam is memory of long-term trends

- 🔹 Pre-training is massive long-term consolidation

Once you treat optimizers as memory…

- ➡️ the boundary between training and inference disappears.

- ➡️ models can update 𝙬𝙝𝙞𝙡𝙚 𝙩𝙝𝙚𝙮 𝙩𝙝𝙞𝙣𝙠.

That’s the basis of Google’s new architecture.
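The "momentum is memory of gradient history" idea can be checked in a few lines. This is a minimal sketch using the plain heavy-ball recursion, with made-up gradients and a hypothetical `beta` of 0.9. It shows that the momentum buffer is exactly a decaying weighted sum of every gradient seen so far, which is what makes it a memory.

```python
def momentum_buffer(grads, beta=0.9):
    """Run the usual momentum recursion m_t = beta * m_{t-1} + g_t."""
    m = 0.0
    for g in grads:
        m = beta * m + g
    return m


def weighted_history(grads, beta=0.9):
    """The same quantity written as an explicit, decaying memory of past gradients."""
    t = len(grads)
    return sum(beta ** (t - 1 - i) * g for i, g in enumerate(grads))


if __name__ == "__main__":
    grads = [1.0, -0.5, 0.25, 2.0]  # illustrative values only
    print(momentum_buffer(grads), weighted_history(grads))
```

The two functions agree up to floating-point rounding: older gradients are still in the buffer, just weighted down by a power of `beta`.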

🎹 𝗛𝗢𝗣𝗘 — The Model Designed to Never Forget

HOPE blends two memory systems:

🎻𝗧𝗶𝘁𝗮𝗻𝘀 (𝗙𝗮𝘀𝘁 𝗠𝗲𝗺𝗼𝗿𝘆)

- 🔹 Self-modifying blocks that adapt during inference.

- 🔹 Real-time learning.

🎺𝗖𝗼𝗻𝘁𝗶𝗻𝘂𝘂𝗺 𝗠𝗲𝗺𝗼𝗿𝘆 𝗦𝘆𝘀𝘁𝗲𝗺 (𝗦𝗹𝗼𝘄 𝗠𝗲𝗺𝗼𝗿𝘆)

- 🔹 A chain of slow-updating memory modules that don’t get overwritten.

- 🔹 Long-term stability.

Together, HOPE learns in multiple tempos — like cognition, not computation.
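What "adapts during inference" can look like, in miniature: the sketch below is a linear associative memory that takes one gradient step on its own prediction error every time it is written to. It is an assumption-laden toy, not the Titans or HOPE architecture. The `FastMemory` class, the learning rate, and the squared-error "surprise" are all hypothetical choices made for illustration.

```python
class FastMemory:
    """Linear associative memory M (dim x dim) that keeps learning while in use."""

    def __init__(self, dim: int, lr: float = 0.5):
        self.dim = dim
        self.lr = lr
        self.M = [[0.0] * dim for _ in range(dim)]

    def read(self, key):
        # Prediction for this key: M @ key
        return [sum(self.M[i][j] * key[j] for j in range(self.dim))
                for i in range(self.dim)]

    def write(self, key, value):
        """One gradient step on 0.5 * ||M @ key - value||^2; returns the surprise."""
        error = [p - v for p, v in zip(self.read(key), value)]
        for i in range(self.dim):
            for j in range(self.dim):
                self.M[i][j] -= self.lr * error[i] * key[j]
        # Surprise = size of the prediction error before this update.
        return sum(e * e for e in error) ** 0.5


if __name__ == "__main__":
    mem = FastMemory(dim=2, lr=0.5)
    key, value = [1.0, 0.0], [0.0, 1.0]
    for _ in range(3):
        print("surprise:", mem.write(key, value))
```

With a unit-length key and a learning rate of 0.5, the surprise halves on each repeated write, and writes to orthogonal keys do not disturb each other. No separate training phase is involved: the memory changes as it is used.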

🎺 The 𝗥𝗲𝘀𝘂𝗹𝘁𝘀?

- 🔹 Continual Learning: Retains old tasks while learning new ones. Zero catastrophic forgetting.

- 🔹 Needle-in-a-Haystack: Scored 100% where Transformers buckled under long contexts.

- 🔹 Language Modeling: Outperformed strong Transformer baselines even on standard LM tasks.

We’ve spent a decade building bigger models that forget easily. Transformers made AI powerful.

Nested Learning could make it 𝗮𝗹𝗶𝘃𝗲: adaptive, continuous, able to remember. It could do what your brain does naturally: 𝗹𝗲𝗮𝗿𝗻 𝘁𝗼𝗱𝗮𝘆 𝘄𝗶𝘁𝗵𝗼𝘂𝘁 𝗹𝗼𝘀𝗶𝗻𝗴 𝘆𝗲𝘀𝘁𝗲𝗿𝗱𝗮𝘆.

This isn’t a drop-in replacement for Transformers — 𝗶𝘁’𝘀 𝗮 𝗱𝗶𝗿𝗲𝗰𝘁𝗶𝗼𝗻, 𝗻𝗼𝘁 𝗮 𝗱𝗲𝘀𝘁𝗶𝗻𝗮𝘁𝗶𝗼𝗻 (𝘆𝗲𝘁).

Reference:

Google Research, “Introducing Nested Learning: A new ML paradigm for continual learning”